Developers in Control

Patrick van Dissel

DPI

Development Process Innovation team

aka Build Tools Automation team

since 2012

~6 engineers

fully autonomous

TAXP

Demo

Features for users

Self-service within a limited framework

Choice between different maturity levels

Support different pipeline types

(e.g. app, db, api)

Man on the Moon

Man on the Moon: Phase 1

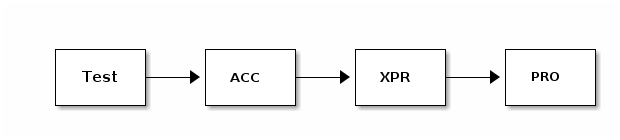

Every code push is auto deployed to test environment

Build and publish production patch fixes from patches/* branches

Team asks SRT to deploy to production

Man on the Moon: Phase 2

same as phase 1, except:

Team can deploy to production themselves

Man on the Moon: Phase 3

same as phase 2, except:

minus the patch production jobs

master == production
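The three phases above can be read as feature flags on one pipeline template. The following is a minimal sketch under that assumption, not the actual TAXP implementation: the `Phase` fields, the `jobs_for` helper, and the job names are all hypothetical and only mirror the bullets on the slides.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Phase:
    """Hypothetical flags derived from the 'Man on the Moon' slides."""
    auto_deploy_to_test: bool         # every code push is deployed to test
    patch_branch_jobs: bool           # build/publish fixes from patches/* branches
    team_deploys_to_production: bool  # False: the team asks SRT to deploy


# Phase 2 = phase 1 + self-service production deploys.
# Phase 3 = phase 2 minus the patch jobs (master == production).
PHASES = {
    1: Phase(auto_deploy_to_test=True, patch_branch_jobs=True,
             team_deploys_to_production=False),
    2: Phase(auto_deploy_to_test=True, patch_branch_jobs=True,
             team_deploys_to_production=True),
    3: Phase(auto_deploy_to_test=True, patch_branch_jobs=False,
             team_deploys_to_production=True),
}


def jobs_for(service: str, phase: int) -> List[str]:
    """Return the (made-up) job names a service would get in a given phase."""
    flags = PHASES[phase]
    jobs = [f"{service}-build"]
    if flags.auto_deploy_to_test:
        jobs.append(f"{service}-deploy-test")
    if flags.patch_branch_jobs:
        jobs.append(f"{service}-patch-build")
    if flags.team_deploys_to_production:
        jobs.append(f"{service}-deploy-production")
    return jobs


if __name__ == "__main__":
    for number in sorted(PHASES):
        print(number, jobs_for("myservice", number))
```

Keeping the phases as data rather than as three separate templates would let a team move up a maturity level by changing a single number.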

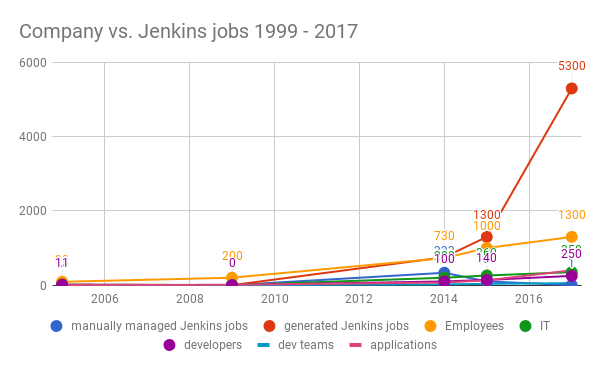

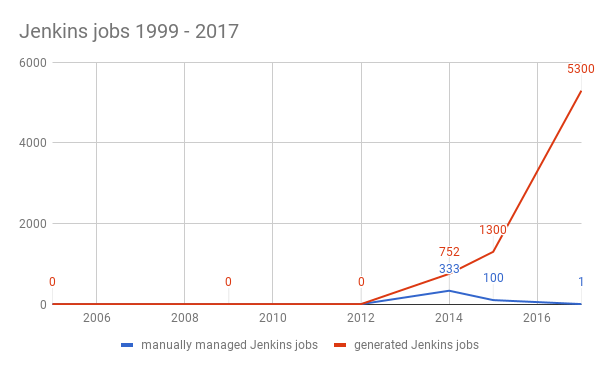

Jenkins @ bol.com

~5300 Jenkins jobs

1 master: VM, 6 cores, 47 GB ram

800 GB disk used for jobs

8 build slaves: VM, 4 cores, 12 GB ram

~120 GB disk used for builds (per slave)

1 build slave: metal, 24 cores, 64 GB ram

~30 GB disk used for builds

159 browser test slaves: VM, 1 core, 4 GB ram

~20 GB disk used for builds (per slave)

More info

Users wanted

More freedom

More autonomy

Smaller releases

Releasing more often

But not everyone was ready

Demo

Principles

Feature Centric Development

Isolated runtime environment

Develop(ment)

Test

Accept(ance)

Environment = Production + Feature

Deploy to Production 'when it’s done'
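"Environment = Production + Feature" can be illustrated as an overlay: start from the versions running in production and replace only the services touched by the feature. This is a sketch of the idea only; the `feature_environment` function, the service names, and the version strings are invented for illustration and do not describe how Mayfly resolves versions.

```python
from typing import Dict


def feature_environment(production: Dict[str, str],
                        feature: Dict[str, str]) -> Dict[str, str]:
    """Overlay the feature's service versions on top of production."""
    environment = dict(production)  # copy, so production stays untouched
    environment.update(feature)     # feature versions win where they overlap
    return environment


if __name__ == "__main__":
    # Hypothetical services and versions.
    production = {"webshop": "4.2.0", "basket": "1.9.3", "search": "2.0.1"}
    feature = {"basket": "feature-123"}
    print(feature_environment(production, feature))
```

Every service the feature does not touch stays on its production version, which is what keeps the feature environment isolated yet realistic.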

Features for users

Almost fully self-service

Git repository manager (Stash)

Continuous Integration (Jenkins with JobDSL)

Isolated feature environments

Issue tracking (Jira)

Definition of Done checks

Mayfly @ bol.com

#1 team used Mayfly before DPI did

We develop Mayfly with Mayfly

25% of the projects developed with Mayfly

48 of 70+ teams develop with Mayfly

3 mesos masters metal, 8 core, 30 GB ram, HA

3 mesos slaves metal, 24 core, 256 GB ram, HA

More info

You build it, you run it, you love it

Fully Autonomous Teams

A service is owned by a team

A team owns one or more services

Integrations are done with a service account

work in progress

Continuous Delivery

Demo

Features for users

Fully self-service

Git repository manager

Continuous Integration

Isolated feature environments

(environment per branch/MR)

Issue tracking, issue boards, milestones, …

Wiki, static-site hosting, …

GitLab @ bol.com

~100 GB of repositories

3,719 repositories (1,335 forks)

15,196 merge-requests

2 app instances VM, 4 cores, 20 GB ram, HA

2 db instances VM, 4 cores, 6 GB ram, HA

2 redis instances VM, 2 cores, 4 GB ram, HA

3 sentinel instances VM, 2 cores, 4 GB ram, HA

2 runner instances VM, 2 cores, 12 GB ram

What about deployments?

Continuous Deployment

Demo

Features for users

Fully self-service

CI Integrations

(Jenkins, Travis CI, git, cron, docker registry, ..)

Deployment Strategies

(red/black, rolling red/black, canary, custom)

Monitoring Integrations

Manual Judgments

Role-based Access Control

Spinnaker @ bol.com

Running in Kubernetes

Deploying to Kubernetes

Focus on container based deployments

Artifact Management

What we want

Users should be able to

Manage their own service account

Claim a package namespace for their service (e.g. com.bol.myservice)

Add remote repositories to be proxied

Which should be approved by security
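The namespace claim in the wish list above could look roughly like the sketch below. It covers only the claiming part, not the proxied remote repositories or the security approval. It is not an Artifactory API: `NamespaceRegistry`, its methods, and the service account names are hypothetical, and the rule that overlapping claims by different accounts are rejected is an assumption.

```python
from typing import Dict, Optional


def _covers(namespace: str, package: str) -> bool:
    """True when package equals the namespace or lives below it."""
    return package == namespace or package.startswith(namespace + ".")


class NamespaceRegistry:
    """In-memory record of which service account claimed which namespace."""

    def __init__(self) -> None:
        self._owners: Dict[str, str] = {}

    def claim(self, namespace: str, service_account: str) -> None:
        """Claim a namespace; reject overlaps with another account's claim."""
        for existing, owner in self._owners.items():
            overlaps = _covers(existing, namespace) or _covers(namespace, existing)
            if overlaps and owner != service_account:
                raise ValueError(
                    f"{namespace} overlaps {existing}, owned by {owner}")
        self._owners[namespace] = service_account

    def owner_of(self, package: str) -> Optional[str]:
        """Return the account that may publish this package, if any."""
        for namespace, owner in self._owners.items():
            if _covers(namespace, package):
                return owner
        return None


if __name__ == "__main__":
    registry = NamespaceRegistry()
    registry.claim("com.bol.myservice", "svc-myservice")
    print(registry.owner_of("com.bol.myservice.api"))
```

Matching on whole dot-separated segments keeps a claim on `com.bol.myservice` from also covering an unrelated `com.bol.myserviceextra`.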

Features for users

Fully self-service

hopefully a future Artifactory version will support this out-of-the-box,

else we will write a layer around Artifactory

Artifactory @ bol.com

Since ~2012

~10.2 TB of artifacts

Of which 10.1 TB are deployables

756,833 artifacts

Maven, npm, bower, PyPI, gems

2 app instances VM, 4 cores, 16 GB ram, HA

2 db instances VM, 4 cores, 6 GB ram, HA

Code Quality

Users should be able to

Manage their own service account

Dashboards, Rules, Quality Profiles, Quality Gates

Be able to use SonarLint against all projects

Features for users

Fully self-service

hopefully SonarQube 6+ will support this out-of-the-box, else we will implement it in SonarQube, or we will write a layer around SonarQube

SonarQube @ bol.com

Since ~2015

~200 projects

1 app instance VM, 4 cores, 8 GB ram

2 db instances VM, 2 cores, 8 GB ram, HA

Build Support

Build Automation consultants

Best practices

Templates

Plugins

Resources

Thanks

Slides & code

ikoodi.nl/talks

github.com/pvdissel/talks

Want to help? banen.bol.com